
AutoML#

This notebook covers the AutoML utilities of the ETNA library.

Table of contents

1. Hyperparameters tuning

1.1 How Tune works

1.2 Example

2. General AutoML

2.1 How Auto works

2.2 Example

2.3 Using custom pipeline pool

[1]:

!pip install "etna[auto, prophet]" -q

[2]:

import warnings

warnings.filterwarnings("ignore")

[3]:

import pandas as pd

from etna.datasets import TSDataset

from etna.metrics import SMAPE

from etna.models import LinearPerSegmentModel

from etna.models import NaiveModel

from etna.pipeline import Pipeline

from etna.transforms import DateFlagsTransform

from etna.transforms import LagTransform

[4]:

HORIZON = 14

1. Hyperparameters tuning#

It is a common task to tune the hyperparameters of an existing pipeline to improve its quality. For this purpose there is the etna.auto.Tune class, which is responsible for creating an optuna study to solve this problem.

In the next sections we will see how it works and how to use it for your particular problems.

1.1 How Tune works#

During initialization, Tune accepts a pipeline, its tuning parameters (params_to_tune), an optimization metric (target_metric), backtest parameters, and optuna study parameters.

In fit, the optuna study is created. During each trial, a sample of parameters is generated from params_to_tune and applied to the pipeline. The new pipeline is then evaluated in a backtest, and the target metric is returned to the optuna framework.
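The trial loop described above can be sketched in plain Python. This is only a toy illustration, not ETNA's or optuna's implementation: `toy_backtest`, `search_space` and `run_study` are hypothetical names, the error values are made up, and random sampling stands in for optuna's samplers.

```python
import random


def toy_backtest(params):
    """Stand-in for a real backtest: returns a made-up error for a configuration."""
    error = 10.0
    if params["model.fit_intercept"]:
        error -= 1.0
    if not params["transforms.1.is_weekend"]:
        error -= 0.5
    return error


# Hypothetical search space: parameter name -> list of candidate values.
search_space = {
    "model.fit_intercept": [False, True],
    "transforms.1.is_weekend": [False, True],
}


def run_study(n_trials, seed=0):
    """Random-search loop: sample parameters, evaluate, keep the best result."""
    rng = random.Random(seed)
    best_params, best_value = None, float("inf")
    for _ in range(n_trials):
        # 1. Sample one value per parameter.
        params = {name: rng.choice(choices) for name, choices in search_space.items()}
        # 2. Evaluate the resulting configuration (a backtest in the real setting).
        value = toy_backtest(params)
        # 3. Report the value and remember the best configuration seen so far.
        if value < best_value:
            best_params, best_value = params, value
    return best_params, best_value


best_params, best_value = run_study(n_trials=20)
print(best_params, best_value)
```

In the real Tune, step 2 is a backtest of the modified pipeline and the sampler decides which parameters to try next.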

Let’s look closer at the params_to_tune parameter. It expects a dictionary that maps parameter names to their distributions. But how should these parameter names be chosen?

1.1.1 set_params#

We are going to make a little detour to explain the set_params method, which is supported by ETNA pipelines, models and transforms. Given a dictionary of parameters, it creates a new object from an existing one with the given parameters changed.

First, we define some objects for our future examples.

[5]:

model = LinearPerSegmentModel()

transforms = [

LagTransform(in_column="target", lags=list(range(HORIZON, HORIZON + 10)), out_column="target_lag"),

DateFlagsTransform(out_column="date_flags"),

]

pipeline = Pipeline(model=model, transforms=transforms, horizon=HORIZON)

Let’s look at a simple example, where we want to change the fit_intercept parameter of the model.

[6]:

model.to_dict()

[6]:

{'fit_intercept': True,

'kwargs': {},

'_target_': 'etna.models.linear.LinearPerSegmentModel'}

[7]:

new_model_params = {"fit_intercept": False}

new_model = model.set_params(**new_model_params)

new_model.to_dict()

[7]:

{'fit_intercept': False,

'kwargs': {},

'_target_': 'etna.models.linear.LinearPerSegmentModel'}

Great! In the next step we want to change the fit_intercept of the model inside the pipeline.

[8]:

pipeline.to_dict()

[8]:

{'model': {'fit_intercept': True,

'kwargs': {},

'_target_': 'etna.models.linear.LinearPerSegmentModel'},

'transforms': [{'in_column': 'target',

'lags': [14, 15, 16, 17, 18, 19, 20, 21, 22, 23],

'out_column': 'target_lag',

'_target_': 'etna.transforms.math.lags.LagTransform'},

{'day_number_in_week': True,

'day_number_in_month': True,

'day_number_in_year': False,

'week_number_in_month': False,

'week_number_in_year': False,

'month_number_in_year': False,

'season_number': False,

'year_number': False,

'is_weekend': True,

'special_days_in_week': (),

'special_days_in_month': (),

'out_column': 'date_flags',

'_target_': 'etna.transforms.timestamp.date_flags.DateFlagsTransform'}],

'horizon': 14,

'_target_': 'etna.pipeline.pipeline.Pipeline'}

[9]:

new_pipeline_params = {"model.fit_intercept": False}

new_pipeline = pipeline.set_params(**new_pipeline_params)

new_pipeline.to_dict()

[9]:

{'model': {'fit_intercept': False,

'kwargs': {},

'_target_': 'etna.models.linear.LinearPerSegmentModel'},

'transforms': [{'in_column': 'target',

'lags': [14, 15, 16, 17, 18, 19, 20, 21, 22, 23],

'out_column': 'target_lag',

'_target_': 'etna.transforms.math.lags.LagTransform'},

{'day_number_in_week': True,

'day_number_in_month': True,

'day_number_in_year': False,

'week_number_in_month': False,

'week_number_in_year': False,

'month_number_in_year': False,

'season_number': False,

'year_number': False,

'is_weekend': True,

'special_days_in_week': (),

'special_days_in_month': (),

'out_column': 'date_flags',

'_target_': 'etna.transforms.timestamp.date_flags.DateFlagsTransform'}],

'horizon': 14,

'_target_': 'etna.pipeline.pipeline.Pipeline'}

Ok, it looks like we managed to do this. In the last step we are going to change the is_weekend flag of DateFlagsTransform inside our pipeline.

[10]:

new_pipeline_params = {"transforms.1.is_weekend": False}

new_pipeline = pipeline.set_params(**new_pipeline_params)

new_pipeline.to_dict()

[10]:

{'model': {'fit_intercept': True,

'kwargs': {},

'_target_': 'etna.models.linear.LinearPerSegmentModel'},

'transforms': [{'in_column': 'target',

'lags': [14, 15, 16, 17, 18, 19, 20, 21, 22, 23],

'out_column': 'target_lag',

'_target_': 'etna.transforms.math.lags.LagTransform'},

{'day_number_in_week': True,

'day_number_in_month': True,

'day_number_in_year': False,

'week_number_in_month': False,

'week_number_in_year': False,

'month_number_in_year': False,

'season_number': False,

'year_number': False,

'is_weekend': False,

'special_days_in_week': (),

'special_days_in_month': (),

'out_column': 'date_flags',

'_target_': 'etna.transforms.timestamp.date_flags.DateFlagsTransform'}],

'horizon': 14,

'_target_': 'etna.pipeline.pipeline.Pipeline'}

As we can see, we managed to do this.
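To make the key format concrete, here is a minimal sketch of how a dotted key such as "transforms.1.is_weekend" can address a value inside a nested structure. It works on plain dicts and lists and is not how ETNA implements set_params; `set_by_path` is a hypothetical helper written for this illustration.

```python
import copy


def set_by_path(config, dotted_key, value):
    """Return a copy of ``config`` with the value at ``dotted_key`` replaced."""
    new_config = copy.deepcopy(config)
    *parents, last = dotted_key.split(".")
    node = new_config
    for part in parents:
        # Lists are addressed by integer position, dicts by key.
        node = node[int(part)] if isinstance(node, list) else node[part]
    if isinstance(node, list):
        node[int(last)] = value
    else:
        node[last] = value
    return new_config


config = {
    "model": {"fit_intercept": True},
    "transforms": [{"out_column": "target_lag"}, {"is_weekend": True}],
}
updated = set_by_path(config, "transforms.1.is_weekend", False)
print(updated["transforms"][1]["is_weekend"])  # False
print(config["transforms"][1]["is_weekend"])   # True: the original is untouched
```

As with set_params, the original object is left unchanged and a modified copy is returned.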

1.1.2 params_to_tune#

Let’s get back to our initial question about params_to_tune. In our optuna study we are going to sample each parameter value from its distribution and pass it into the pipeline.set_params method. So, the keys of params_to_tune should be valid for the set_params method.

Distributions are taken from etna.distributions, and they match the optuna.Trial.suggest_ methods.

For example, something like this will be valid for our pipeline defined above:

[11]:

from etna.distributions import CategoricalDistribution

example_params_to_tune = {

"model.fit_intercept": CategoricalDistribution(choices=[False, True]),

"transforms.1.is_weekend": CategoricalDistribution(choices=[False, True]),

}

This custom dict can be passed into the Tune class. This will be shown in the Example below.

There is some good news: it isn’t necessary to define params_to_tune yourself, because we have a default grid for many of our classes. The default grid is available by calling the params_to_tune method on a pipeline, model or transform. Let’s check our pipeline:

[12]:

pipeline.params_to_tune()

[12]:

{'model.fit_intercept': CategoricalDistribution(choices=[False, True]),

'transforms.1.day_number_in_week': CategoricalDistribution(choices=[False, True]),

'transforms.1.day_number_in_month': CategoricalDistribution(choices=[False, True]),

'transforms.1.day_number_in_year': CategoricalDistribution(choices=[False, True]),

'transforms.1.week_number_in_month': CategoricalDistribution(choices=[False, True]),

'transforms.1.week_number_in_year': CategoricalDistribution(choices=[False, True]),

'transforms.1.month_number_in_year': CategoricalDistribution(choices=[False, True]),

'transforms.1.season_number': CategoricalDistribution(choices=[False, True]),

'transforms.1.year_number': CategoricalDistribution(choices=[False, True]),

'transforms.1.is_weekend': CategoricalDistribution(choices=[False, True])}

Now we are ready to use it in practice.

1.2 Example#

1.2.1 Loading data#

Let’s start by loading example data.

[13]:

df = pd.read_csv("data/example_dataset.csv")

df.head()

[13]:

| | timestamp | segment | target |

|---|---|---|---|

| 0 | 2019-01-01 | segment_a | 170 |

| 1 | 2019-01-02 | segment_a | 243 |

| 2 | 2019-01-03 | segment_a | 267 |

| 3 | 2019-01-04 | segment_a | 287 |

| 4 | 2019-01-05 | segment_a | 279 |

[14]:

full_ts = TSDataset(df, freq="D")

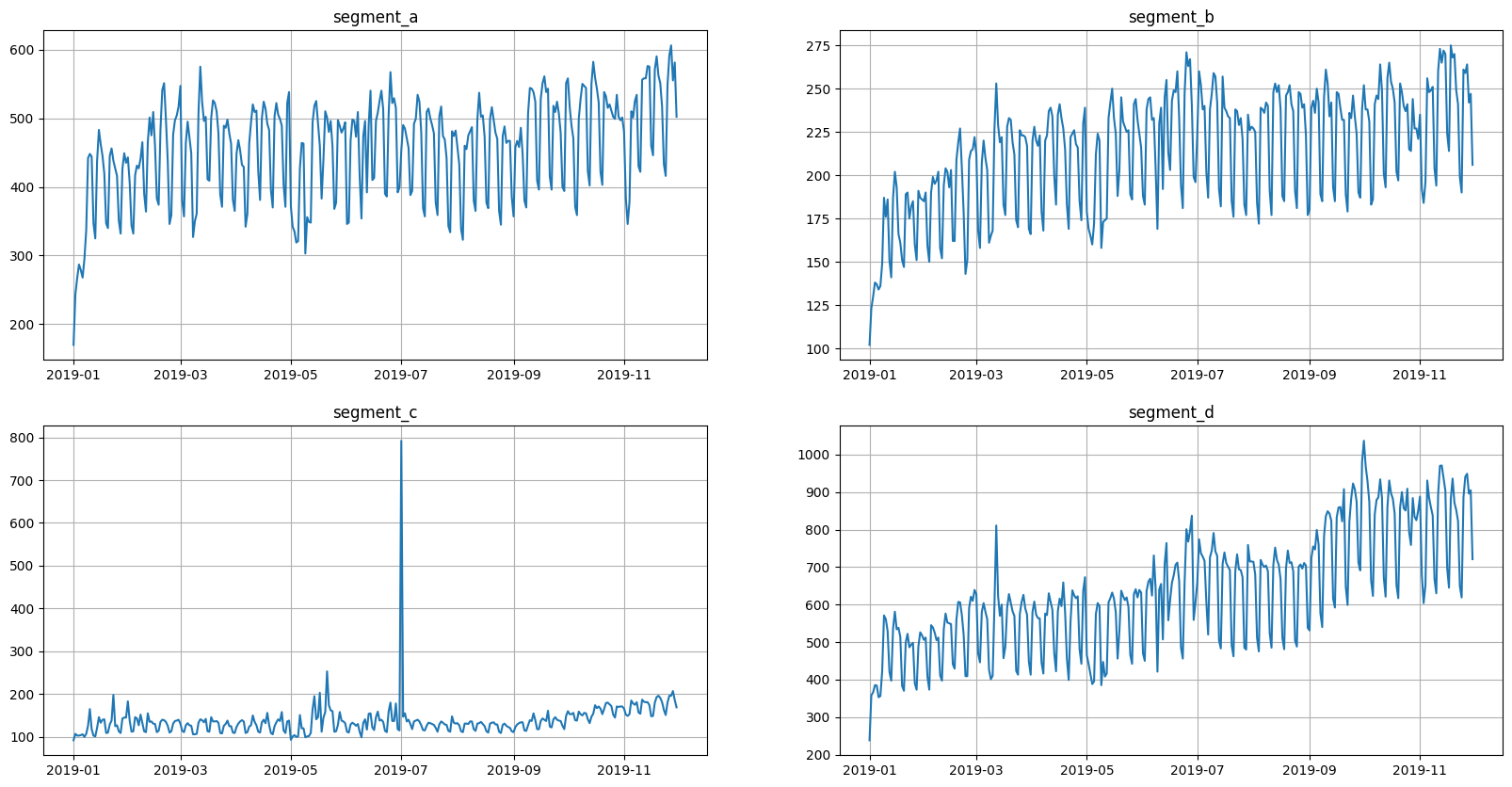

full_ts.plot()

Let’s divide the current dataset into train and validation parts. We will use the validation part later to check the final results.

[15]:

ts, _ = full_ts.train_test_split(test_size=HORIZON * 5)

1.2.2 Running Tune#

We are going to define our Tune object:

[16]:

from etna.auto import Tune

tune = Tune(pipeline=pipeline, target_metric=SMAPE(), horizon=HORIZON, backtest_params=dict(n_folds=5))

We used mostly default parameters for this example. But for your own experiments you might want to also set up other parameters.

For example, parameter runner allows you to run tuning in parallel on a local machine, and parameter storage makes it possible to store optuna results on a dedicated remote server.

For a full list of parameters we advise you to check our documentation.

Let’s hide the optuna logs, because there are too many of them for a notebook.

[17]:

import optuna

optuna.logging.set_verbosity(optuna.logging.CRITICAL)

Let’s run the tuning.

[18]:

%%capture

best_pipeline = tune.fit(ts=ts, n_trials=20)

The %%capture command just hides the output.

1.2.3 Running Tune with custom params_to_tune#

Let’s remember that earlier we created a dict:

example_params_to_tune = {

"model.fit_intercept": CategoricalDistribution(choices=[False, True]),

"transforms.1.is_weekend": CategoricalDistribution(choices=[False, True]),

}

Now we can use these parameters when initializing Tune.

[19]:

tune_custom_params = Tune(

pipeline=pipeline,

target_metric=SMAPE(),

horizon=HORIZON,

backtest_params=dict(n_folds=5),

params_to_tune=example_params_to_tune,

)

Let’s run the tuning with our custom parameters.

[20]:

%%capture

best_pipeline_custom_params = tune_custom_params.fit(ts=ts, n_trials=20)

1.2.4 Analysis#

In the last section dedicated to Tune we will look at methods for result analysis.

First of all, there is the summary method, which shows us the results of the optuna trials.

[21]:

tune.summary()

[21]:

| | pipeline | hash | Sign_mean | Sign_median | Sign_std | Sign_notna_size | Sign_percentile_5 | Sign_percentile_25 | Sign_percentile_75 | Sign_percentile_95 | ... | MSE_percentile_95 | MedAE_mean | MedAE_median | MedAE_std | MedAE_notna_size | MedAE_percentile_5 | MedAE_percentile_25 | MedAE_percentile_75 | MedAE_percentile_95 | state |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | Pipeline(model = LinearPerSegmentModel(fit_int... | f4f02e1d5f60b8f322a4a8a622dd1c1e | -0.478571 | -0.500000 | 0.177712 | 4.0 | -0.672857 | -0.621429 | -0.357143 | -0.254286 | ... | 2953.865443 | 22.334611 | 21.000232 | 6.989627 | 4.0 | 14.955846 | 18.861388 | 24.473455 | 31.581505 | TrialState.COMPLETE |

| 1 | Pipeline(model = LinearPerSegmentModel(fit_int... | 3d7b7af16d71a36f3b935f69e113e22d | -0.485714 | -0.457143 | 0.209956 | 4.0 | -0.745714 | -0.642857 | -0.300000 | -0.265714 | ... | 3294.855806 | 23.389796 | 22.762122 | 7.345652 | 4.0 | 14.897792 | 19.344439 | 26.807479 | 32.760543 | TrialState.COMPLETE |

| 2 | Pipeline(model = LinearPerSegmentModel(fit_int... | 7c7932114268832a5458acfecfb453fc | -0.271429 | -0.200000 | 0.229017 | 4.0 | -0.581429 | -0.392857 | -0.078571 | -0.061429 | ... | 4209.624737 | 23.336111 | 22.572681 | 10.435228 | 4.0 | 11.235277 | 18.503043 | 27.405750 | 36.505748 | TrialState.COMPLETE |

| 3 | Pipeline(model = LinearPerSegmentModel(fit_int... | b7ac5f7fcf9c8959626befe263a9d561 | -0.085714 | 0.000000 | 0.182946 | 4.0 | -0.340000 | -0.100000 | 0.014286 | 0.048571 | ... | 5665.228696 | 33.937644 | 35.976862 | 14.941386 | 4.0 | 14.444379 | 27.282228 | 42.632278 | 50.576005 | TrialState.COMPLETE |

| 4 | Pipeline(model = LinearPerSegmentModel(fit_int... | e928929f89156d88ef49e28abaf55847 | -0.421429 | -0.414286 | 0.179994 | 4.0 | -0.620000 | -0.585714 | -0.250000 | -0.232857 | ... | 3181.592755 | 25.265089 | 23.166650 | 11.452719 | 4.0 | 13.001779 | 18.666844 | 29.764896 | 40.466215 | TrialState.COMPLETE |

| 5 | Pipeline(model = LinearPerSegmentModel(fit_int... | 3b4311d41fcaab7307235ea23b6d4599 | -0.385714 | -0.400000 | 0.343749 | 4.0 | -0.788571 | -0.514286 | -0.271429 | 0.037143 | ... | 4837.444681 | 32.276030 | 35.792514 | 14.113259 | 4.0 | 13.499409 | 24.106508 | 43.962035 | 46.129572 | TrialState.COMPLETE |

| 6 | Pipeline(model = LinearPerSegmentModel(fit_int... | 74065ebc11c81bed6a9819d026c7cd84 | -0.435714 | -0.442857 | 0.213212 | 4.0 | -0.672857 | -0.621429 | -0.257143 | -0.188571 | ... | 4802.299660 | 24.936077 | 27.304852 | 7.183649 | 4.0 | 15.108636 | 21.478207 | 30.762723 | 31.447233 | TrialState.COMPLETE |

| 7 | Pipeline(model = LinearPerSegmentModel(fit_int... | b0d0420255c6117045f8254bf8f377a0 | -0.464286 | -0.442857 | 0.225312 | 4.0 | -0.725714 | -0.657143 | -0.250000 | -0.232857 | ... | 3688.168155 | 25.819143 | 28.393903 | 7.493711 | 4.0 | 15.618131 | 21.989342 | 32.223704 | 32.415490 | TrialState.COMPLETE |

| 8 | Pipeline(model = LinearPerSegmentModel(fit_int... | 25dcd8bb095f87a1ffc499fa6a83ef5d | -0.457143 | -0.457143 | 0.230350 | 4.0 | -0.705714 | -0.671429 | -0.242857 | -0.208571 | ... | 3154.538337 | 24.289797 | 22.380642 | 10.391095 | 4.0 | 13.252341 | 19.168974 | 27.501465 | 38.000072 | TrialState.COMPLETE |

| 9 | Pipeline(model = LinearPerSegmentModel(fit_int... | 3f1ca1759261598081fa3bb2f32fe0ac | -0.435714 | -0.414286 | 0.253446 | 4.0 | -0.725714 | -0.657143 | -0.192857 | -0.175714 | ... | 3611.477391 | 26.488927 | 23.750327 | 11.973486 | 4.0 | 14.242057 | 20.027917 | 30.211337 | 42.569838 | TrialState.COMPLETE |

| 10 | Pipeline(model = LinearPerSegmentModel(fit_int... | 8363309e454e72993f86f10c7fc7c137 | -0.185714 | -0.157143 | 0.196396 | 4.0 | -0.431429 | -0.328571 | -0.014286 | 0.020000 | ... | 3526.513999 | 21.682156 | 17.027383 | 13.846262 | 4.0 | 9.110958 | 11.100846 | 27.608693 | 40.770037 | TrialState.COMPLETE |

| 11 | Pipeline(model = LinearPerSegmentModel(fit_int... | 8363309e454e72993f86f10c7fc7c137 | -0.185714 | -0.157143 | 0.196396 | 4.0 | -0.431429 | -0.328571 | -0.014286 | 0.020000 | ... | 3526.513999 | 21.682156 | 17.027383 | 13.846262 | 4.0 | 9.110958 | 11.100846 | 27.608693 | 40.770037 | TrialState.COMPLETE |

| 12 | Pipeline(model = LinearPerSegmentModel(fit_int... | 8363309e454e72993f86f10c7fc7c137 | -0.185714 | -0.157143 | 0.196396 | 4.0 | -0.431429 | -0.328571 | -0.014286 | 0.020000 | ... | 3526.513999 | 21.682156 | 17.027383 | 13.846262 | 4.0 | 9.110958 | 11.100846 | 27.608693 | 40.770037 | TrialState.COMPLETE |

| 13 | Pipeline(model = LinearPerSegmentModel(fit_int... | 8363309e454e72993f86f10c7fc7c137 | -0.185714 | -0.157143 | 0.196396 | 4.0 | -0.431429 | -0.328571 | -0.014286 | 0.020000 | ... | 3526.513999 | 21.682156 | 17.027383 | 13.846262 | 4.0 | 9.110958 | 11.100846 | 27.608693 | 40.770037 | TrialState.COMPLETE |

| 14 | Pipeline(model = LinearPerSegmentModel(fit_int... | 8363309e454e72993f86f10c7fc7c137 | -0.185714 | -0.157143 | 0.196396 | 4.0 | -0.431429 | -0.328571 | -0.014286 | 0.020000 | ... | 3526.513999 | 21.682156 | 17.027383 | 13.846262 | 4.0 | 9.110958 | 11.100846 | 27.608693 | 40.770037 | TrialState.COMPLETE |

| 15 | Pipeline(model = LinearPerSegmentModel(fit_int... | 8363309e454e72993f86f10c7fc7c137 | -0.185714 | -0.157143 | 0.196396 | 4.0 | -0.431429 | -0.328571 | -0.014286 | 0.020000 | ... | 3526.513999 | 21.682156 | 17.027383 | 13.846262 | 4.0 | 9.110958 | 11.100846 | 27.608693 | 40.770037 | TrialState.COMPLETE |

| 16 | Pipeline(model = LinearPerSegmentModel(fit_int... | 8363309e454e72993f86f10c7fc7c137 | -0.185714 | -0.157143 | 0.196396 | 4.0 | -0.431429 | -0.328571 | -0.014286 | 0.020000 | ... | 3526.513999 | 21.682156 | 17.027383 | 13.846262 | 4.0 | 9.110958 | 11.100846 | 27.608693 | 40.770037 | TrialState.COMPLETE |

| 17 | Pipeline(model = LinearPerSegmentModel(fit_int... | 8363309e454e72993f86f10c7fc7c137 | -0.185714 | -0.157143 | 0.196396 | 4.0 | -0.431429 | -0.328571 | -0.014286 | 0.020000 | ... | 3526.513999 | 21.682156 | 17.027383 | 13.846262 | 4.0 | 9.110958 | 11.100846 | 27.608693 | 40.770037 | TrialState.COMPLETE |

| 18 | Pipeline(model = LinearPerSegmentModel(fit_int... | 6f595f4f43b323804c04d4cea49c169b | -0.435714 | -0.414286 | 0.281668 | 4.0 | -0.754286 | -0.685714 | -0.164286 | -0.147143 | ... | 2681.501259 | 22.111993 | 21.624614 | 6.887034 | 4.0 | 14.197890 | 17.080865 | 26.655742 | 30.708428 | TrialState.COMPLETE |

| 19 | Pipeline(model = LinearPerSegmentModel(fit_int... | 8363309e454e72993f86f10c7fc7c137 | -0.185714 | -0.157143 | 0.196396 | 4.0 | -0.431429 | -0.328571 | -0.014286 | 0.020000 | ... | 3526.513999 | 21.682156 | 17.027383 | 13.846262 | 4.0 | 9.110958 | 11.100846 | 27.608693 | 40.770037 | TrialState.COMPLETE |

20 rows × 43 columns

Let’s show only the columns we are interested in.

[22]:

tune.summary()[["hash", "pipeline", "SMAPE_mean", "state"]].sort_values("SMAPE_mean")

[22]:

| | hash | pipeline | SMAPE_mean | state |

|---|---|---|---|---|

| 19 | 8363309e454e72993f86f10c7fc7c137 | Pipeline(model = LinearPerSegmentModel(fit_int... | 8.556535 | TrialState.COMPLETE |

| 17 | 8363309e454e72993f86f10c7fc7c137 | Pipeline(model = LinearPerSegmentModel(fit_int... | 8.556535 | TrialState.COMPLETE |

| 16 | 8363309e454e72993f86f10c7fc7c137 | Pipeline(model = LinearPerSegmentModel(fit_int... | 8.556535 | TrialState.COMPLETE |

| 15 | 8363309e454e72993f86f10c7fc7c137 | Pipeline(model = LinearPerSegmentModel(fit_int... | 8.556535 | TrialState.COMPLETE |

| 14 | 8363309e454e72993f86f10c7fc7c137 | Pipeline(model = LinearPerSegmentModel(fit_int... | 8.556535 | TrialState.COMPLETE |

| 13 | 8363309e454e72993f86f10c7fc7c137 | Pipeline(model = LinearPerSegmentModel(fit_int... | 8.556535 | TrialState.COMPLETE |

| 12 | 8363309e454e72993f86f10c7fc7c137 | Pipeline(model = LinearPerSegmentModel(fit_int... | 8.556535 | TrialState.COMPLETE |

| 10 | 8363309e454e72993f86f10c7fc7c137 | Pipeline(model = LinearPerSegmentModel(fit_int... | 8.556535 | TrialState.COMPLETE |

| 11 | 8363309e454e72993f86f10c7fc7c137 | Pipeline(model = LinearPerSegmentModel(fit_int... | 8.556535 | TrialState.COMPLETE |

| 2 | 7c7932114268832a5458acfecfb453fc | Pipeline(model = LinearPerSegmentModel(fit_int... | 9.210183 | TrialState.COMPLETE |

| 8 | 25dcd8bb095f87a1ffc499fa6a83ef5d | Pipeline(model = LinearPerSegmentModel(fit_int... | 9.943658 | TrialState.COMPLETE |

| 4 | e928929f89156d88ef49e28abaf55847 | Pipeline(model = LinearPerSegmentModel(fit_int... | 9.946866 | TrialState.COMPLETE |

| 0 | f4f02e1d5f60b8f322a4a8a622dd1c1e | Pipeline(model = LinearPerSegmentModel(fit_int... | 9.957781 | TrialState.COMPLETE |

| 18 | 6f595f4f43b323804c04d4cea49c169b | Pipeline(model = LinearPerSegmentModel(fit_int... | 10.061742 | TrialState.COMPLETE |

| 1 | 3d7b7af16d71a36f3b935f69e113e22d | Pipeline(model = LinearPerSegmentModel(fit_int... | 10.306909 | TrialState.COMPLETE |

| 9 | 3f1ca1759261598081fa3bb2f32fe0ac | Pipeline(model = LinearPerSegmentModel(fit_int... | 10.554444 | TrialState.COMPLETE |

| 5 | 3b4311d41fcaab7307235ea23b6d4599 | Pipeline(model = LinearPerSegmentModel(fit_int... | 10.756703 | TrialState.COMPLETE |

| 6 | 74065ebc11c81bed6a9819d026c7cd84 | Pipeline(model = LinearPerSegmentModel(fit_int... | 10.917164 | TrialState.COMPLETE |

| 3 | b7ac5f7fcf9c8959626befe263a9d561 | Pipeline(model = LinearPerSegmentModel(fit_int... | 11.255320 | TrialState.COMPLETE |

| 7 | b0d0420255c6117045f8254bf8f377a0 | Pipeline(model = LinearPerSegmentModel(fit_int... | 11.478760 | TrialState.COMPLETE |

As we can see, there are duplicate rows according to the hash column: some trials sampled the same hyperparameters and therefore have the same results. Such duplicates are handled specially: they are skipped during optimization, and the previously computed metric values are returned.
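The idea of skipping duplicates can be sketched as a cache keyed by a hash of the sampled configuration. This is a toy illustration only: how ETNA computes its hash is not shown in this notebook, so the MD5-of-JSON scheme below is an assumption, and `config_hash` and `CachedObjective` are hypothetical names.

```python
import hashlib
import json


def config_hash(params):
    """Stable hash of a parameter dict (assumed scheme: MD5 of its sorted JSON form)."""
    payload = json.dumps(params, sort_keys=True).encode("utf-8")
    return hashlib.md5(payload).hexdigest()


class CachedObjective:
    """Wrap an expensive objective and reuse results for repeated configurations."""

    def __init__(self, evaluate):
        self.evaluate = evaluate
        self.cache = {}
        self.n_evaluations = 0

    def __call__(self, params):
        key = config_hash(params)
        if key not in self.cache:
            # Only configurations that were not seen before are actually evaluated.
            self.n_evaluations += 1
            self.cache[key] = self.evaluate(params)
        return self.cache[key]


objective = CachedObjective(lambda p: 9.0 if p["fit_intercept"] else 10.0)
trials = [{"fit_intercept": True}, {"fit_intercept": False}, {"fit_intercept": True}]
values = [objective(p) for p in trials]
print(values, objective.n_evaluations)  # [9.0, 10.0, 9.0] 2
```

Three trials are recorded, but the expensive evaluation runs only twice, which mirrors the repeated rows with identical hashes in the summary.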

Duplicates in the summary can be eliminated using the hash column.

[23]:

tune.summary()[["hash", "pipeline", "SMAPE_mean", "state"]].sort_values("SMAPE_mean").drop_duplicates(subset="hash")

[23]:

| | hash | pipeline | SMAPE_mean | state |

|---|---|---|---|---|

| 19 | 8363309e454e72993f86f10c7fc7c137 | Pipeline(model = LinearPerSegmentModel(fit_int... | 8.556535 | TrialState.COMPLETE |

| 2 | 7c7932114268832a5458acfecfb453fc | Pipeline(model = LinearPerSegmentModel(fit_int... | 9.210183 | TrialState.COMPLETE |

| 8 | 25dcd8bb095f87a1ffc499fa6a83ef5d | Pipeline(model = LinearPerSegmentModel(fit_int... | 9.943658 | TrialState.COMPLETE |

| 4 | e928929f89156d88ef49e28abaf55847 | Pipeline(model = LinearPerSegmentModel(fit_int... | 9.946866 | TrialState.COMPLETE |

| 0 | f4f02e1d5f60b8f322a4a8a622dd1c1e | Pipeline(model = LinearPerSegmentModel(fit_int... | 9.957781 | TrialState.COMPLETE |

| 18 | 6f595f4f43b323804c04d4cea49c169b | Pipeline(model = LinearPerSegmentModel(fit_int... | 10.061742 | TrialState.COMPLETE |

| 1 | 3d7b7af16d71a36f3b935f69e113e22d | Pipeline(model = LinearPerSegmentModel(fit_int... | 10.306909 | TrialState.COMPLETE |

| 9 | 3f1ca1759261598081fa3bb2f32fe0ac | Pipeline(model = LinearPerSegmentModel(fit_int... | 10.554444 | TrialState.COMPLETE |

| 5 | 3b4311d41fcaab7307235ea23b6d4599 | Pipeline(model = LinearPerSegmentModel(fit_int... | 10.756703 | TrialState.COMPLETE |

| 6 | 74065ebc11c81bed6a9819d026c7cd84 | Pipeline(model = LinearPerSegmentModel(fit_int... | 10.917164 | TrialState.COMPLETE |

| 3 | b7ac5f7fcf9c8959626befe263a9d561 | Pipeline(model = LinearPerSegmentModel(fit_int... | 11.255320 | TrialState.COMPLETE |

| 7 | b0d0420255c6117045f8254bf8f377a0 | Pipeline(model = LinearPerSegmentModel(fit_int... | 11.478760 | TrialState.COMPLETE |

The second method, top_k, is useful when you want to get the best pipelines that were tried, without duplicates.

[24]:

top_3_pipelines = tune.top_k(k=3)

[25]:

top_3_pipelines

[25]:

[Pipeline(model = LinearPerSegmentModel(fit_intercept = True, ), transforms = [LagTransform(in_column = 'target', lags = [14, 15, 16, 17, 18, 19, 20, 21, 22, 23], out_column = 'target_lag', ), DateFlagsTransform(day_number_in_week = False, day_number_in_month = True, day_number_in_year = False, week_number_in_month = True, week_number_in_year = False, month_number_in_year = False, season_number = False, year_number = False, is_weekend = True, special_days_in_week = (), special_days_in_month = (), out_column = 'date_flags', in_column = None, )], horizon = 14, ),

Pipeline(model = LinearPerSegmentModel(fit_intercept = True, ), transforms = [LagTransform(in_column = 'target', lags = [14, 15, 16, 17, 18, 19, 20, 21, 22, 23], out_column = 'target_lag', ), DateFlagsTransform(day_number_in_week = False, day_number_in_month = True, day_number_in_year = False, week_number_in_month = True, week_number_in_year = False, month_number_in_year = False, season_number = False, year_number = False, is_weekend = False, special_days_in_week = (), special_days_in_month = (), out_column = 'date_flags', in_column = None, )], horizon = 14, ),

Pipeline(model = LinearPerSegmentModel(fit_intercept = False, ), transforms = [LagTransform(in_column = 'target', lags = [14, 15, 16, 17, 18, 19, 20, 21, 22, 23], out_column = 'target_lag', ), DateFlagsTransform(day_number_in_week = True, day_number_in_month = False, day_number_in_year = True, week_number_in_month = False, week_number_in_year = False, month_number_in_year = False, season_number = False, year_number = True, is_weekend = False, special_days_in_week = (), special_days_in_month = (), out_column = 'date_flags', in_column = None, )], horizon = 14, )]
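Conceptually, selecting the best pipelines without duplicates amounts to sorting trials by the metric and keeping one trial per hash. The sketch below shows that idea on plain dicts. It is not ETNA's implementation of top_k; `top_k_unique` is a hypothetical helper, and the hashes are abbreviated from the summary above.

```python
def top_k_unique(trials, k):
    """Return the k best trials (lowest metric), keeping one trial per hash."""
    if k <= 0:
        return []
    seen = set()
    result = []
    for trial in sorted(trials, key=lambda t: t["SMAPE_mean"]):
        if trial["hash"] in seen:
            continue  # the same configuration was already selected
        seen.add(trial["hash"])
        result.append(trial)
        if len(result) == k:
            break
    return result


trials = [
    {"hash": "8363", "SMAPE_mean": 8.556535},
    {"hash": "7c79", "SMAPE_mean": 9.210183},
    {"hash": "8363", "SMAPE_mean": 8.556535},
    {"hash": "25dc", "SMAPE_mean": 9.943658},
    {"hash": "e928", "SMAPE_mean": 9.946866},
]
print([t["hash"] for t in top_k_unique(trials, k=3)])  # ['8363', '7c79', '25dc']
```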

2. General AutoML#

Hyperparameter tuning is useful, but it can be too narrow. In this section we turn our attention to a general AutoML pipeline. In ETNA we have the etna.auto.Auto class for automatic pipeline selection. It can be useful for quickly creating a good baseline for your forecasting task.

2.1 How Auto works#

The Auto initializer has parameters similar to those of Tune, but instead of a single pipeline it works with a pool. A pool, in general, is just a list of pipelines.

During fit there are two stages:

pool stage,

tuning stage.

The pool stage is responsible for checking every pipeline in the given pool. For each pipeline we run a backtest and compute target_metric. The results are saved in an optuna study.

The tuning stage takes the tune_size best pipelines according to the results of the pool stage and then runs Tune with the default params_to_tune for them sequentially, from the best to the worst.

Limit parameters n_trials and timeout are shared between pool and tuning stages. First, we run pool stage with given n_trials and timeout. After that, the remaining values are divided equally among tune_size tuning steps.
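As a worked example of the shared budget, the sketch below splits n_trials between the two stages. It is a toy calculation under stated assumptions: the pool stage is assumed to use one trial per pipeline, and the remainder is split with floor division. The exact rounding used by the library is not described here, and `split_trial_budget` is a hypothetical helper.

```python
def split_trial_budget(n_trials, pool_size, tune_size):
    """Toy split of a shared trial budget between the pool and tuning stages."""
    # The pool stage evaluates each pipeline once, limited by the total budget.
    pool_trials = min(n_trials, pool_size)
    remaining = n_trials - pool_trials
    # Assumption: the remaining trials are divided equally using floor division.
    per_tuner = remaining // tune_size if tune_size > 0 else 0
    return pool_trials, [per_tuner] * tune_size


pool_trials, tuning_trials = split_trial_budget(n_trials=100, pool_size=20, tune_size=3)
print(pool_trials, tuning_trials)  # 20 [26, 26, 26]
```

With a pool of 20 pipelines and n_trials=100, 80 trials remain for tuning, so each of the 3 tuning steps gets roughly a third of them.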

2.2 Example#

We will move straight to the example.

[26]:

from etna.auto import Auto

auto = Auto(target_metric=SMAPE(), horizon=HORIZON, backtest_params=dict(n_folds=5))

We used mostly default parameters, even pool. There is also a default sampler, but to make results more reproducible we fixed the seed.

Let’s start the fitting. We can start by running only pool stage.

[27]:

%%capture

best_pool_pipeline = auto.fit(ts=ts, tune_size=0)

[28]:

auto.summary()[["hash", "pipeline", "SMAPE_mean", "state", "study"]].sort_values("SMAPE_mean")

[28]:

| | hash | pipeline | SMAPE_mean | state | study |

|---|---|---|---|---|---|

| 10 | af8088ac0abfde46e93a8dbb407a2117 | Pipeline(model = CatBoostPerSegmentModel(itera... | 5.057438 | TrialState.COMPLETE | pool |

| 19 | d8215d95e2c6c9a4b4fdacf3fa77dddc | Pipeline(model = NaiveModel(lag = 7, ), transf... | 5.164436 | TrialState.COMPLETE | pool |

| 14 | 8f640faabcac0552153ca19337179f3b | Pipeline(model = HoltWintersModel(trend = 'add... | 5.906624 | TrialState.COMPLETE | pool |

| 17 | d6a44adb551f1aec09ef37c14aed260f | Pipeline(model = SeasonalMovingAverageModel(wi... | 6.197182 | TrialState.COMPLETE | pool |

| 1 | 16eb77200eb2fd5dc1f6f2a5067884cd | Pipeline(model = HoltWintersModel(trend = 'add... | 6.374571 | TrialState.COMPLETE | pool |

| 8 | 4c07749e913403906cd033e4882fc4f9 | Pipeline(model = SeasonalMovingAverageModel(wi... | 6.529721 | TrialState.COMPLETE | pool |

| 13 | 6e2eb71d033b6d0607f5b6d0a7596ce9 | Pipeline(model = ProphetModel(growth = 'linear... | 7.767201 | TrialState.COMPLETE | pool |

| 15 | a640ddfb767ea0cbf31751ddda6e36ee | Pipeline(model = CatBoostMultiSegmentModel(ite... | 7.801582 | TrialState.COMPLETE | pool |

| 7 | 6bb58e7ce09eab00448d5732240ec2ec | Pipeline(model = CatBoostMultiSegmentModel(ite... | 7.803701 | TrialState.COMPLETE | pool |

| 6 | cfeb21bcf2e922a390ade8be9d845e0d | Pipeline(model = ProphetModel(growth = 'linear... | 7.892242 | TrialState.COMPLETE | pool |

| 16 | 2e36e0b9cb67a43bb1bf96fa2ccf718f | Pipeline(model = LinearMultiSegmentModel(fit_i... | 9.205423 | TrialState.COMPLETE | pool |

| 5 | 8b9f5fa09754a80f17380dec2b998f1d | Pipeline(model = LinearPerSegmentModel(fit_int... | 10.997462 | TrialState.COMPLETE | pool |

| 11 | d62c0579459d4a1b88aea8ed6effdf4e | Pipeline(model = MovingAverageModel(window = 1... | 11.317256 | TrialState.COMPLETE | pool |

| 3 | 5916e5b653295271c79caae490618ee9 | Pipeline(model = MovingAverageModel(window = 2... | 12.028916 | TrialState.COMPLETE | pool |

| 12 | 5a91b6c8acc2c461913df44fd1429375 | Pipeline(model = ElasticPerSegmentModel(alpha ... | 12.213320 | TrialState.COMPLETE | pool |

| 2 | 403b3e18012af5ff9815b408f5c2e47d | Pipeline(model = MovingAverageModel(window = 4... | 12.243011 | TrialState.COMPLETE | pool |

| 9 | 6cf8605e6c513053ac4f5203e330c59d | Pipeline(model = HoltWintersModel(trend = None... | 14.969618 | TrialState.COMPLETE | pool |

| 18 | 53e90ae4cf7f1f71e6396107549c25ef | Pipeline(model = NaiveModel(lag = 1, ), transf... | 19.361078 | TrialState.COMPLETE | pool |

| 4 | 90b31b54cb8c01867be05a3320852682 | Pipeline(model = ElasticMultiSegmentModel(alph... | 35.971326 | TrialState.COMPLETE | pool |

| 0 | a5e036978ef9cc9f297c9eb2c280af05 | Pipeline(model = AutoARIMAModel(), transforms ... | NaN | TrialState.RUNNING | pool |

We can continue our training. The pool stage is over and there will be only the tuning stage. If we don’t want to wait forever we should limit the tuning by fixing n_trials or timeout.

We also set some parameters for optuna.Study.optimize:

gc_after_trial=True: to prevent fit from increasing memory consumption;

catch=(Exception,): to prevent failing if some trials are erroneous.
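The effect of the catch parameter can be illustrated with a small loop that records a failed trial instead of stopping. This is a simplified sketch of the idea, not optuna's code; `run_trials` and the toy `objective` are hypothetical.

```python
def run_trials(objective, candidates, catch=(Exception,)):
    """Evaluate every candidate; exceptions listed in ``catch`` mark the trial as failed."""
    results = []
    for candidate in candidates:
        try:
            results.append((candidate, objective(candidate), "COMPLETE"))
        except catch:
            # The failure is recorded and the loop continues with the next trial.
            results.append((candidate, None, "FAIL"))
    return results


def objective(x):
    if x == 0:
        raise ZeroDivisionError("cannot evaluate this candidate")
    return 1.0 / x


results = run_trials(objective, [2, 0, 4])
print([state for _, _, state in results])  # ['COMPLETE', 'FAIL', 'COMPLETE']
```

With an empty catch tuple the same error would propagate and stop the whole run, which is what catch=(Exception,) avoids.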

[29]:

%%capture

best_tuning_pipeline = auto.fit(ts=ts, tune_size=3, n_trials=100, gc_after_trial=True, catch=(Exception,))

Let’s look at the results.

[30]:

auto.summary()[["hash", "pipeline", "SMAPE_mean", "state", "study"]].sort_values("SMAPE_mean").drop_duplicates(

subset=("hash", "study")

).head(10)

[30]:

| | hash | pipeline | SMAPE_mean | state | study |

|---|---|---|---|---|---|

| 56 | 419fc80cf634ba0888c4f899f666ad45 | Pipeline(model = HoltWintersModel(trend = 'mul... | 4.852890 | TrialState.COMPLETE | tuning/8f640faabcac0552153ca19337179f3b |

| 10 | af8088ac0abfde46e93a8dbb407a2117 | Pipeline(model = CatBoostPerSegmentModel(itera... | 5.057438 | TrialState.COMPLETE | pool |

| 34 | 7fa4e05b62a79bdb0730826d5e337f6c | Pipeline(model = CatBoostPerSegmentModel(itera... | 5.072415 | TrialState.COMPLETE | tuning/af8088ac0abfde46e93a8dbb407a2117 |

| 23 | 382825866425cac211691205a9537c95 | Pipeline(model = CatBoostPerSegmentModel(itera... | 5.081583 | TrialState.COMPLETE | tuning/af8088ac0abfde46e93a8dbb407a2117 |

| 46 | 3737af6845dceb580046dca8c167792a | Pipeline(model = CatBoostPerSegmentModel(itera... | 5.094663 | TrialState.COMPLETE | tuning/af8088ac0abfde46e93a8dbb407a2117 |

| 43 | e12d351fb8cd2627612e1b9602da0a41 | Pipeline(model = CatBoostPerSegmentModel(itera... | 5.128972 | TrialState.COMPLETE | tuning/af8088ac0abfde46e93a8dbb407a2117 |

| 39 | 4ad7a6a588941fc808878f0d15290cae | Pipeline(model = CatBoostPerSegmentModel(itera... | 5.161434 | TrialState.COMPLETE | tuning/af8088ac0abfde46e93a8dbb407a2117 |

| 79 | d8215d95e2c6c9a4b4fdacf3fa77dddc | Pipeline(model = NaiveModel(lag = 7, ), transf... | 5.164436 | TrialState.COMPLETE | tuning/d8215d95e2c6c9a4b4fdacf3fa77dddc |

| 19 | d8215d95e2c6c9a4b4fdacf3fa77dddc | Pipeline(model = NaiveModel(lag = 7, ), transf... | 5.164436 | TrialState.COMPLETE | pool |

| 32 | 7a0ae80fd698cc464237d5b8034ebd69 | Pipeline(model = CatBoostPerSegmentModel(itera... | 5.185379 | TrialState.COMPLETE | tuning/af8088ac0abfde46e93a8dbb407a2117 |

[31]:

auto.top_k(k=5)

[31]:

[Pipeline(model = HoltWintersModel(trend = 'mul', damped_trend = False, seasonal = 'mul', seasonal_periods = None, initialization_method = 'estimated', initial_level = None, initial_trend = None, initial_seasonal = None, use_boxcox = True, bounds = None, dates = None, freq = None, missing = 'none', smoothing_level = None, smoothing_trend = None, smoothing_seasonal = None, damping_trend = None, ), transforms = [], horizon = 14, ),

Pipeline(model = CatBoostPerSegmentModel(iterations = None, depth = None, learning_rate = None, logging_level = 'Silent', l2_leaf_reg = None, thread_count = None, ), transforms = [LagTransform(in_column = 'target', lags = [15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28], out_column = None, ), DateFlagsTransform(day_number_in_week = True, day_number_in_month = True, day_number_in_year = False, week_number_in_month = False, week_number_in_year = True, month_number_in_year = False, season_number = False, year_number = False, is_weekend = True, special_days_in_week = (), special_days_in_month = (), out_column = None, in_column = None, )], horizon = 14, ),

Pipeline(model = CatBoostPerSegmentModel(iterations = None, depth = 7, learning_rate = 0.05983113562173073, logging_level = 'Silent', l2_leaf_reg = 0.6375370292626799, thread_count = None, random_strength = 0.0011149264989700346, ), transforms = [LagTransform(in_column = 'target', lags = [15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28], out_column = None, ), DateFlagsTransform(day_number_in_week = True, day_number_in_month = True, day_number_in_year = True, week_number_in_month = False, week_number_in_year = True, month_number_in_year = False, season_number = False, year_number = False, is_weekend = True, special_days_in_week = [], special_days_in_month = [], out_column = None, in_column = None, )], horizon = 14, ),

Pipeline(model = CatBoostPerSegmentModel(iterations = None, depth = 8, learning_rate = 0.029265104573384305, logging_level = 'Silent', l2_leaf_reg = 0.2664339575790138, thread_count = None, random_strength = 0.00018293439416272825, ), transforms = [LagTransform(in_column = 'target', lags = [15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28], out_column = None, ), DateFlagsTransform(day_number_in_week = True, day_number_in_month = False, day_number_in_year = False, week_number_in_month = False, week_number_in_year = False, month_number_in_year = False, season_number = False, year_number = False, is_weekend = True, special_days_in_week = [], special_days_in_month = [], out_column = None, in_column = None, )], horizon = 14, ),

Pipeline(model = CatBoostPerSegmentModel(iterations = None, depth = 9, learning_rate = 0.06097816249847619, logging_level = 'Silent', l2_leaf_reg = 0.6251067228498621, thread_count = None, random_strength = 0.005498689821514818, ), transforms = [LagTransform(in_column = 'target', lags = [15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28], out_column = None, ), DateFlagsTransform(day_number_in_week = False, day_number_in_month = False, day_number_in_year = False, week_number_in_month = True, week_number_in_year = False, month_number_in_year = False, season_number = False, year_number = True, is_weekend = True, special_days_in_week = [], special_days_in_month = [], out_column = None, in_column = None, )], horizon = 14, )]

If we look at the study column, we will see that the best trial from the tuning stage is better than the best trial from the pool stage. It means that the tuning stage was successful and improved the final result.

Let’s compare the best pipelines of the pool and tuning stages on the hold-out part of the initial ts.

[32]:

%%capture

best_pool_metrics, _, _ = best_pool_pipeline.backtest(ts=full_ts, metrics=[SMAPE()], n_folds=5)

best_tuning_metrics, _, _ = best_tuning_pipeline.backtest(ts=full_ts, metrics=[SMAPE()], n_folds=5)

[33]:

best_pool_smape = best_pool_metrics["SMAPE"].mean()

best_tuning_smape = best_tuning_metrics["SMAPE"].mean()

print(f"Best pool SMAPE: {best_pool_smape:.3f}")

print(f"Best tuning SMAPE: {best_tuning_smape:.3f}")

Best pool SMAPE: 8.262

Best tuning SMAPE: 8.188

As we can see, the results are slightly better after the tuning stage, but the difference may be statistically insignificant. For your datasets the results could be different.

2.3 Using custom pipeline pool#

We can define our own set of pipelines for the search.

[34]:

pool = [

Pipeline(model=NaiveModel(lag=1), transforms=(), horizon=HORIZON),

Pipeline(

model=LinearPerSegmentModel(),

transforms=[LagTransform(in_column="target", lags=list(range(HORIZON, 2 * HORIZON)), out_column="target_lag")],

horizon=HORIZON,

),

]

[35]:

%%capture

auto = Auto(

target_metric=SMAPE(),

horizon=HORIZON,

pool=pool,

backtest_params=dict(n_folds=1),

storage="sqlite:///etna-auto-custom.db",

)

best_pool_pipeline = auto.fit(ts=ts, tune_size=0)

[36]:

auto.summary()[["hash", "pipeline", "SMAPE_mean", "state", "study"]].sort_values("SMAPE_mean")

[36]:

| | hash | pipeline | SMAPE_mean | state | study |

|---|---|---|---|---|---|

| 1 | d4b50dc4c1b7debb0355ebfbd9c39ffb | Pipeline(model = LinearPerSegmentModel(fit_int... | 8.587004 | TrialState.COMPLETE | pool |

| 0 | 53e90ae4cf7f1f71e6396107549c25ef | Pipeline(model = NaiveModel(lag = 1, ), transf... | 22.155640 | TrialState.COMPLETE | pool |

3. Summary#

In this notebook we discussed how AutoML works in the ETNA library and how to use it. There are two supported scenarios:

Tuning your existing pipeline;

Automatic search for a pipeline for your forecasting task.